VoteWell

votewell.ca · kieran/votewell · 7 years ago

VoteWell is a strategic voting tool for elections where the left vote is split among several parties. It's published for elections in Canada and, more recently, for the 2019 UK General Election.

The problem

Canada, for example, has several national parties: one conservative (right-leaning) party, and three progressive (leftist) parties. It's very common for the left vote to be fragmented among the three, which lets the conservative party win without a majority of the votes.

The problem is a well-understood consequence of first-past-the-post voting, which many countries (including Canada and the UK) still use today. There are excellent and entertaining illustrations of this problem on the Internet already, so I won't attempt to cover it here.

An information problem

The whole point of voting is for people to communicate their preferences to the government, so they may be fairly represented in parliament.

The issue with the current system is that we're losing important information when we cast our vote. Imagine the following ballot:

[x] Party A (Left)

[ ] Party B (Left)

[ ] Party C (Left)

[ ] Party D (Right)

We can assume one of two things from this ballot:

- The voter either wants Party A to represent them, or
- The voter does not want one or more of parties B, C, or D in power

Strictly from the perspective of encoding information, we can do better.

In this scenario, it's typical for the intent of the voter to be closer to the following:

- I would prefer Party A to win
- Either party B or C would be ok
- I absolutely do not want Party D in power

One way to better communicate these preferences is by using a voting system that incorporates a ranked ballot.

[1] Party A (L)

[3] Party B (L)

[2] Party C (L)

[ ] Party D (R)

Here we have a lot more information encoded in the ballot. We can now know:

- The voter's preferred choice (A)
- The voter's alternative choices (in order, C and B)
- Any party the voter does not support (D)
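To make the encoding concrete, here is a minimal sketch of the ranked ballot above as a data structure. The object shape and the `decodeBallot` helper are assumptions made for illustration; they are not taken from VoteWell.

```javascript
// A ranked ballot as a plain object: party -> rank (1 = first choice).
// A party that is missing from the object was left blank by the voter.
const ballot = { A: 1, B: 3, C: 2 };
const parties = ["A", "B", "C", "D"];

// Recover the three pieces of information the ballot encodes.
function decodeBallot(ballot, parties) {
  // Sort the ranked parties from first choice to last choice.
  const ranked = Object.keys(ballot).sort((x, y) => ballot[x] - ballot[y]);
  return {
    preferred: ranked[0],
    alternatives: ranked.slice(1),
    unsupported: parties.filter((party) => !(party in ballot)),
  };
}

const decoded = decodeBallot(ballot, parties);
console.log(decoded);
```

A first-past-the-post ballot, by comparison, could only ever fill in the `preferred` field.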

Strategic voting

Strategic voting is essentially a manual version of instant-runoff voting: you transfer your vote to an acceptable party that's likely to win.

It's very common to vote strategically in Canada, but the problem is you need to know who's likely to win in your riding before you vote.

Enter VoteWell

The method for determining the leading leftist party is fairly straightforward, but involves a lot of manual work. I wrote VoteWell to automate these steps:

- Determine what riding you're in

- Collect polling data

- Predict the likely election results per riding

- Figure out if strategic voting is necessary in your riding

- Determine which party to vote for, if necessary
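The last two steps can be sketched as a small pure function. Everything specific here is an assumption for illustration: the prediction shape (party to projected vote share), the 5-point `margin`, and the rule that strategic voting is "necessary" when the strongest non-left party is ahead of, or within the margin of, the leading left party. VoteWell's actual rule may differ.

```javascript
// prediction: { party: projected vote share in percent } for one riding.
// leftParties: the parties the voter considers acceptable.
function recommend(prediction, leftParties, margin = 5) {
  // Sort all parties by projected share, highest first.
  const ranked = Object.entries(prediction).sort((a, b) => b[1] - a[1]);
  const left = ranked.filter(([party]) => leftParties.includes(party));
  const others = ranked.filter(([party]) => !leftParties.includes(party));
  if (left.length === 0) return { strategic: false, party: null };

  const [leadingLeft, leftShare] = left[0];
  const bestOtherShare = others.length ? others[0][1] : 0;

  // Assumed rule: vote strategically when the race is close or being lost.
  const strategic = bestOtherShare + margin >= leftShare;
  return { strategic, party: strategic ? leadingLeft : null };
}

const contested = recommend({ A: 22, B: 31, C: 12, D: 35 }, ["A", "B", "C"]);
const safe = recommend({ A: 55, B: 20, C: 5, D: 20 }, ["A", "B", "C"]);
console.log(contested, safe);
```

In the contested riding the function points at Party B, the leading left party. In the safe riding it returns no recommendation, since the voter can simply pick their favourite.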

Polling data

There are lots of election prediction blogs in Canada, each with their own strengths. They all use their own blend of statistics and/or machine learning to weight and cast polling data in different ridings.

After surveying the lot, I decided to use Calculated Politics as the source of my prediction data. They had what seemed like a good methodology, and they published per-riding prediction data.

I wrote a quick regex to parse the prediction data, distilling it into a single JSON file.
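As a rough idea of what that kind of parsing can look like: the input format below is invented for illustration (one riding per line, then party and share pairs), as are the riding names and numbers. The real source data and regex were almost certainly different.

```javascript
// Made-up input: "<riding>: <party> <share>, <party> <share>, ..."
const raw = `
Riding One: A 22.0, B 31.5, C 12.0, D 34.5
Riding Two: A 55, B 20, C 5, D 20
`;

const lineRe = /^(.+?):\s*(.+)$/;               // riding name, then the rest
const shareRe = /([A-Z]+)\s+(\d+(?:\.\d+)?)/g;  // party code, then a number

function parsePredictions(text) {
  const ridings = {};
  for (const line of text.split("\n")) {
    const match = lineRe.exec(line.trim());
    if (!match) continue; // skip blank or unrecognised lines
    const [, riding, rest] = match;
    ridings[riding] = {};
    for (const [, party, share] of rest.matchAll(shareRe)) {
      ridings[riding][party] = Number(share);
    }
  }
  return ridings;
}

const predictions = parsePredictions(raw);
console.log(JSON.stringify(predictions, null, 2));
```

The result is a single object keyed by riding name, which can be written out as one JSON file and bundled with the site.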

Displaying the data

React was a natural fit for this project. By moving all the data, logic, and UI into the browser, I'd be able to get by with static hosting. Any worries I had about potential traffic spikes would be essentially moot, since CDNs are designed and built for heavy static load. Although I did end up introducing a server component later, the decision to go static/CDN continued to pay huge dividends.

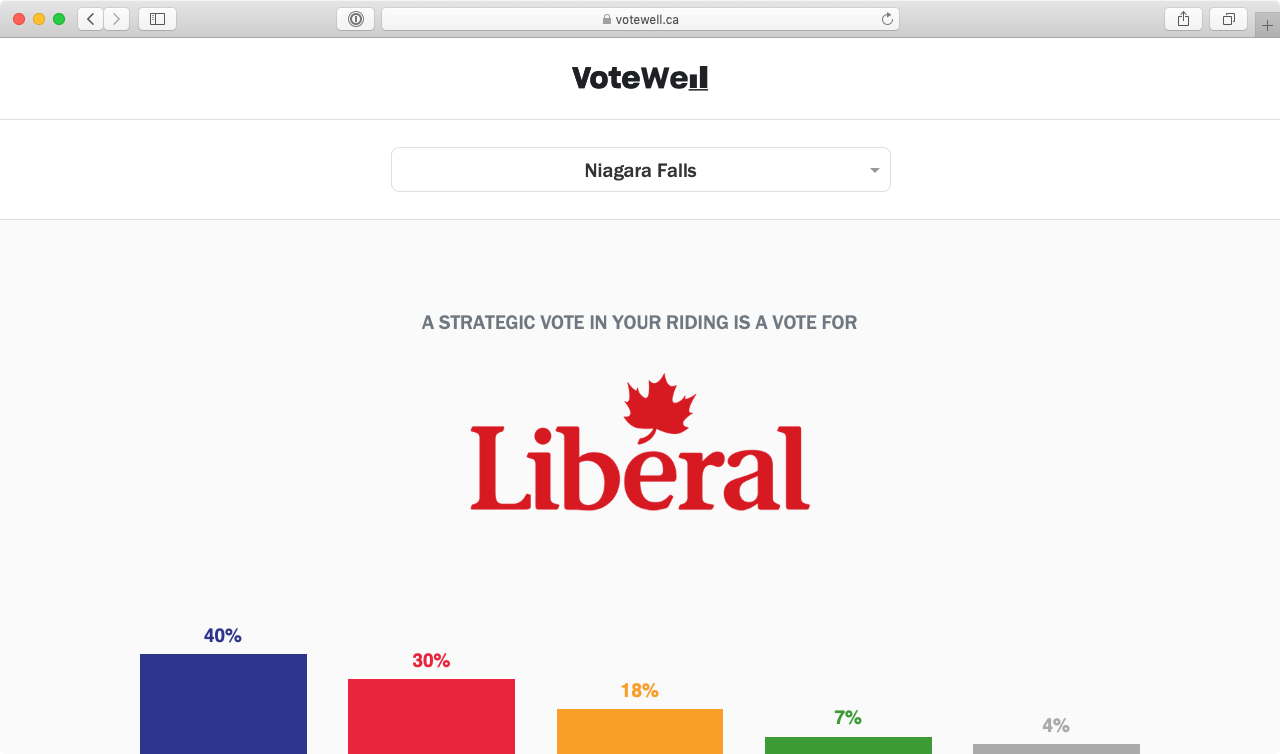

In the most basic sense, the site allows you to select your riding, consults the data we previously collected, computes your best option, then tells you what that option is. An extremely high-fidelity mockup follows:

[Mockup: the headline "A vote in [your riding] is a vote for Party B!" above an ASCII bar chart of parties A, B, C, and D]

Add to that some extremely basic Sass styling and the project has reached MVP status. That means it's time to...

Ship it™

We're spoiled for choice for hosting static sites lately, but nothing quite matches the convenience of Netlify.

As an added bonus, Netlify's GitHub integration & automated build process fit my needs perfectly. By default, they'll take any push to your master branch on GitHub, run npm run build, and deploy the resulting ./dist folder.

After creating the site on Netlify, adding a parcel build command to my package.json was all it took to get production deploys working:

    {
      "name": "votewell",
      ...
      "scripts": {
        "start": "npx parcel serve ./index.html",
        "build": "npx parcel build ./index.html"
      },
      "dependencies": { ... }
    }

You can run npm run build yourself locally to see the output.

Really, any static host would work at this point, but I can't think of a reason not to use Netlify. This is not a paid placement, but I'm aware it sounds like one 🤣.

loop do { ... }

Now that VoteWell is "in the wild", the bulk of the work can begin 🛠️. Here are some iterations, in no particular order.

Geolocation

Almost nobody knows what riding they're in. Fortunately, all you need to figure that out is their location and the right map. And modern browsers can tell you their location!

Ideally, I wanted a way to default the riding selector to where the user was. That way, if they wanted to look at the results for other ridings, they could just select it by name. Also, if the geolocation failed (or was denied) everything still worked, just not as well. I like to think of UI enhancements as escalators - if they fail they should still work as stairs.
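Here is a minimal sketch of that "escalator" behaviour, assuming a hypothetical `lookupRiding` function and a `fallback` value for the selector. The geolocation object is passed in so the same code runs in a browser (with `navigator.geolocation`) or in a test with a fake.

```javascript
// Resolve the user's coordinates, or null if geolocation is unavailable,
// denied, or times out. It never rejects, so callers always get an answer.
function locate(geolocation, timeoutMs = 5000) {
  return new Promise((resolve) => {
    if (!geolocation) return resolve(null);
    geolocation.getCurrentPosition(
      (pos) => resolve({ lat: pos.coords.latitude, lng: pos.coords.longitude }),
      () => resolve(null),
      { timeout: timeoutMs }
    );
  });
}

// Pick the riding to preselect. Any failure falls back to the plain
// selector default, so the page still works without geolocation.
async function defaultRiding(geolocation, lookupRiding, fallback) {
  const point = await locate(geolocation);
  if (!point) return fallback;
  try {
    return (await lookupRiding(point)) || fallback;
  } catch {
    return fallback;
  }
}

// In a browser:
//   defaultRiding(navigator.geolocation, lookupRiding, "").then(setRiding);
```

Because every failure path resolves to the fallback, the riding selector never depends on the enhancement succeeding.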

I found an ESRI Shapefile of the federal electoral districts via Open Data Canada.

Now all I had to do was figure out which shape (riding) contained a provided lat/lng.

Hoping to keep this work in the browser, I looked around for a JS solution and found Turf. Basically, it loads a bunch of GeoJSON shapes into memory and lets you find the shapes that contain any given point.
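To show the idea behind that lookup without pulling in a dependency, here is a hand-rolled ray-casting sketch over GeoJSON polygons. It is a stand-in for what a library like Turf does (Turf exposes this kind of check as `booleanPointInPolygon`), not Turf's implementation. The two square "ridings" and the `properties.name` field are made up for illustration.

```javascript
// Ray casting: cast a horizontal ray from the point and count edge crossings.
// An odd number of crossings means the point is inside the ring.
function inRing([x, y], ring) {
  let inside = false;
  for (let i = 0, j = ring.length - 1; i < ring.length; j = i++) {
    const [xi, yi] = ring[i];
    const [xj, yj] = ring[j];
    const crosses =
      (yi > y) !== (yj > y) && x < ((xj - xi) * (y - yi)) / (yj - yi) + xi;
    if (crosses) inside = !inside;
  }
  return inside;
}

// GeoJSON polygon: the first ring is the outline, any others are holes.
function inPolygon(point, rings) {
  return inRing(point, rings[0]) && !rings.slice(1).some((hole) => inRing(point, hole));
}

// point is [lng, lat]; collection is a GeoJSON FeatureCollection.
function findRiding(point, collection) {
  const feature = collection.features.find(({ geometry }) => {
    const polygons =
      geometry.type === "MultiPolygon" ? geometry.coordinates : [geometry.coordinates];
    return polygons.some((rings) => inPolygon(point, rings));
  });
  return feature ? feature.properties.name : null;
}

const square = (x0) => [[[x0, 0], [x0 + 10, 0], [x0 + 10, 10], [x0, 10], [x0, 0]]];
const ridings = {
  type: "FeatureCollection",
  features: [
    { type: "Feature", properties: { name: "West" }, geometry: { type: "Polygon", coordinates: square(0) } },
    { type: "Feature", properties: { name: "East" }, geometry: { type: "Polygon", coordinates: square(10) } },
  ],
};

console.log(findRiding([15, 5], ridings));
```

Note that GeoJSON positions are `[longitude, latitude]`, which is the reverse of the lat/lng order most people say out loud.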

First hurdle: Turf expects GeoJSON data, and I have an ESRI Shapefile. Without getting too detailed, this is not a trivial conversion. Fortunately, a tool exists to make the necessary conversion. I made a note on how to install / run the tool in the README.

Even after simplifying the shape data, it still takes up a whopping 1.3 MB, or ~500 KB gzipped. Certainly possible, but less than ideal when all you really care about is a ~20 byte riding name.

I moved the lat/lng -> riding resolution into an API call, and deployed it to AWS Lambda via up.

Lambda is great because it seamlessly auto-scales from 0 to... a lot, then back down to 0 again.

The downside to Lambda is that it tends to get a little cost-inefficient under high load.

Fearing high traffic, I moved the service to a tiny Lightsail instance instead (the $3.50/mo one). It was a nice predictable Ubuntu environment running nodemon. It never broke a sweat, hovering around 1% load. Clearly overkill, and being a VPS, it's always running.

What I really wanted was a hybrid of the two - a VPS that auto-scaled up, then down to 0 for the off-season. I ended up finding that in Google Cloud Run.

It basically works like Lambda, but instead of a single function it exposes a running Docker container. You're charged in typical 100ms windows (with a generous free tier), and it pauses the instance after every request. One thing that differentiates it, though, is that it can handle concurrent requests per instance. If you have several requests that all complete within the same 100ms window, you're only billed once (while the instance is live).
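The billing difference is easier to see with numbers. This is a deliberately simplified model of the behaviour described above (time rounded up to 100ms windows, overlapping requests sharing one instance), not the provider's actual billing rules. The request timings are invented.

```javascript
const WINDOW_MS = 100;
const roundUp = (ms) => Math.ceil(ms / WINDOW_MS) * WINDOW_MS;

// Per-invocation model: every request is billed on its own.
function billedPerRequest(requests) {
  return requests.reduce((sum, { start, end }) => sum + roundUp(end - start), 0);
}

// Per-instance model: overlapping requests share one billed span,
// so only the time the instance is live is counted.
function billedPerInstance(requests) {
  const sorted = [...requests].sort((a, b) => a.start - b.start);
  let total = 0;
  let current = null;
  for (const { start, end } of sorted) {
    if (current && start <= current.end) {
      current.end = Math.max(current.end, end); // extend the live span
    } else {
      if (current) total += roundUp(current.end - current.start);
      current = { start, end };
    }
  }
  if (current) total += roundUp(current.end - current.start);
  return total;
}

// Three overlapping requests, timestamps in milliseconds.
const requests = [
  { start: 0, end: 40 },
  { start: 10, end: 60 },
  { start: 20, end: 90 },
];

console.log(billedPerRequest(requests), billedPerInstance(requests)); // 300 100
```

Under this model, the three overlapping requests cost three windows when billed individually and one window when they share an instance.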

Of the three, the UX for up was certainly the best. GCP is much rougher around the edges, but the usage fit was too hard to ignore.

Another small change I made was moving the lat/lng -> riding resolution itself into MongoDB. Shedding the constraints of working in a browser environment opened up some great options for working with GeoJSON, and MongoDB has some really fantastic GeoJSON index support.

Lookups became at least an order of magnitude faster, which is nice.
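For reference, a lookup like this in MongoDB generally comes down to a `2dsphere` index plus a `$geoIntersects` query. The collection name (`ridings`) and document shape (`{ name, geometry }`) below are assumptions for illustration, not VoteWell's actual schema.

```javascript
// Assumed documents: { name: "<riding>", geometry: <GeoJSON Polygon or MultiPolygon> }

// Index spec, to be passed to createIndex on the (hypothetical) ridings collection.
const ridingIndex = { geometry: "2dsphere" };

// Filter that matches the riding whose shape contains the given point.
// GeoJSON positions are [longitude, latitude], in that order.
function ridingFilter(lat, lng) {
  return {
    geometry: {
      $geoIntersects: {
        $geometry: { type: "Point", coordinates: [lng, lat] },
      },
    },
  };
}

// With the official Node.js driver, usage would look roughly like:
//   await db.collection("ridings").createIndex(ridingIndex);
//   const riding = await db.collection("ridings").findOne(ridingFilter(lat, lng));
console.log(JSON.stringify(ridingFilter(45.4215, -75.6972)));
```

The index is what makes the query fast: without it, the database has to test the point against every riding shape.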

The biggest hurdle in moving to Cloud Run was probably getting the MongoDB service to run inside the Docker container. Arguably, running both services inside one container is a distinct anti-pattern, but it fits this use case. I ended up abusing job control in bash to this effect.

Netlify: a caching proxy?

I discovered a really great feature of Netlify during all this: it can act as a caching proxy! If you specify a redirect rule in your netlify.toml with a code of 200, Netlify will proxy the request for you. What's more, if you set Cache-Control headers it will cache the results on the same CDN it uses to host your static files. This single feature ended up intercepting about 80% of my geolocation requests, cutting my server load by a factor of 5.

    /api/1 ⟶ [Netlify] ⟶ [Server]
           ⟵ [Netlify] ⟵
    /api/1 ⟶ [Netlify]
           ⟵ (cached!)
    /api/2 ⟶ [Netlify] ⟶ [Server]
           ⟵ [Netlify] ⟵

Another nice benefit is that you don't need to bother with the CORS dance.
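A sketch of the server side of that setup: a plain Node request handler that marks its responses as cacheable. The `/api/riding` path, the one-day `max-age`, the `api.example.com` host, and the `lookupRiding` function are all placeholders for illustration.

```javascript
// The matching proxy rule in netlify.toml would look something like:
//   [[redirects]]
//     from = "/api/*"
//     to = "https://api.example.com/:splat"
//     status = 200

// Answer a riding lookup and tell any cache in front of us to keep it.
function handleRiding(req, res, lookupRiding) {
  const params = new URL(req.url, "http://localhost").searchParams;
  const lat = parseFloat(params.get("lat"));
  const lng = parseFloat(params.get("lng"));

  if (Number.isNaN(lat) || Number.isNaN(lng)) {
    res.writeHead(400, { "Content-Type": "application/json" });
    return res.end(JSON.stringify({ error: "lat and lng are required" }));
  }

  res.writeHead(200, {
    "Content-Type": "application/json",
    // Riding boundaries rarely change, so a long lifetime is reasonable.
    "Cache-Control": "public, max-age=86400",
  });
  res.end(JSON.stringify({ riding: lookupRiding(lat, lng) }));
}

// Wiring it up with Node's built-in http module:
//   require("http")
//     .createServer((req, res) => handleRiding(req, res, lookupRiding))
//     .listen(3000);
```

Only successful responses carry the `Cache-Control` header here, so a malformed request is never cached on the CDN.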

Design

The effect good design has on a product is difficult for me to articulate, but it's absolutely a force multiplier.

I was super fortunate to catch the attention of my friend, the extremely talented Arthur Chayka, who took it upon himself to lend me his design direction and expertise.

I'm sure VoteWell owes a lot of its traction to Arthur's design chops.

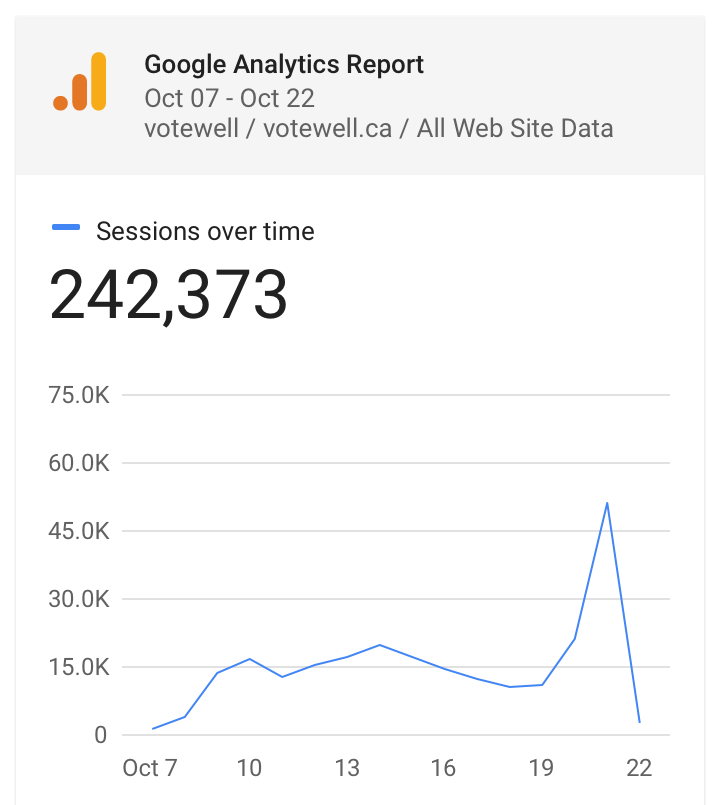

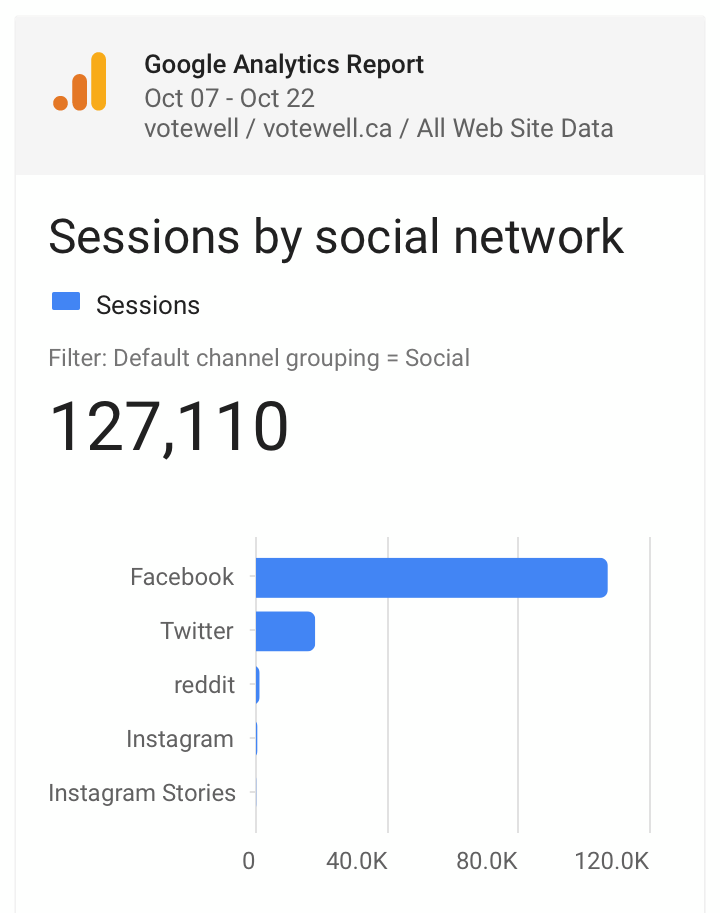

Measure all the things!

I'm admittedly a bit of an Analytics Noob, but I always make sure at least the most basic Google Analytics agent is present.

Lots of interesting information can be gleaned from the default analytics.

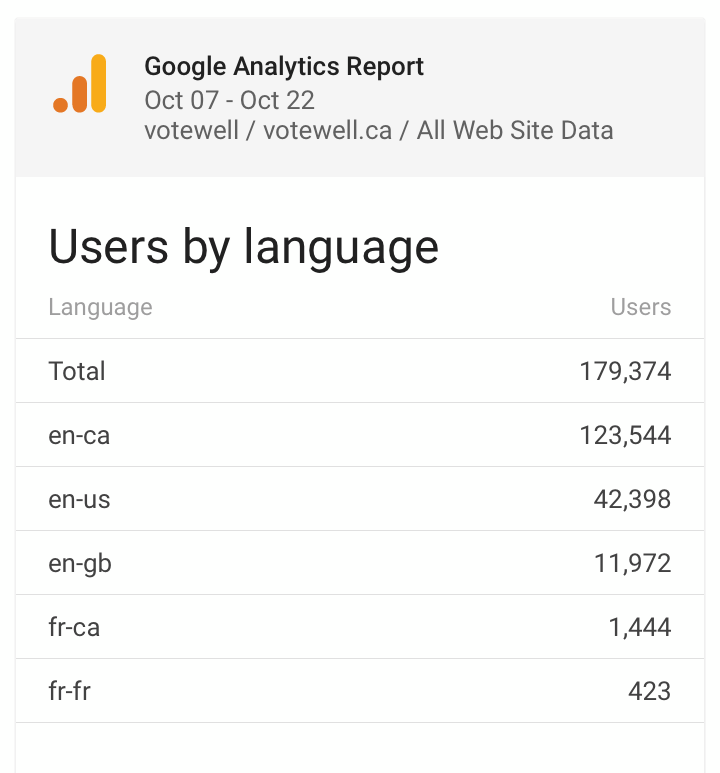

For example: I delayed launching the site until I had French translations, assuming about 20% of my traffic would be French (which is in line with census data). In retrospect, I should have launched right away, then prioritized according to the actual data.

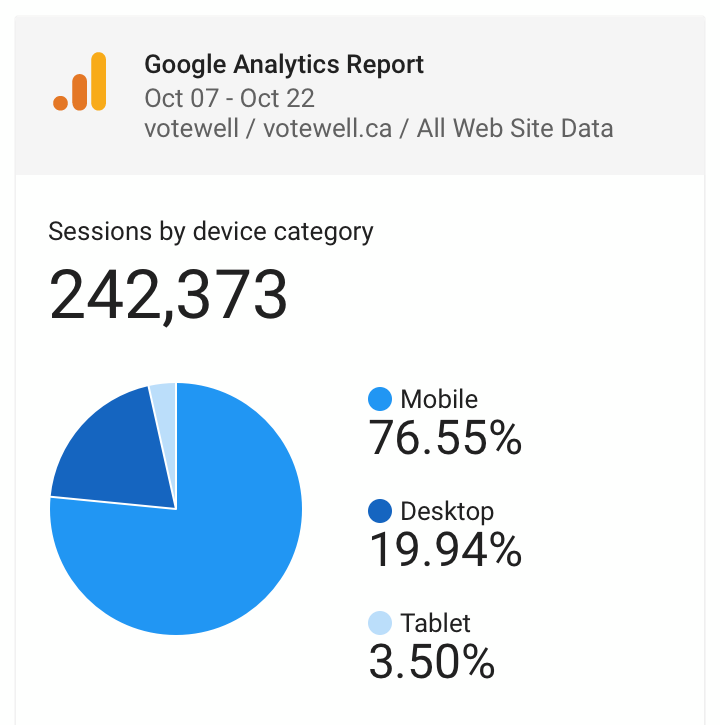

I'm always surprised by just how dominant mobile traffic is. I'm not sure why I have this expectation bias towards desktop. Maybe because I develop on it? I need to get with the early 2000s and start designing mobile first already.

Error tracking

Another thing to file in the "I wish I had always just done this" folder is error / exception tracking. Sentry is the only one I've had a decent amount of experience with, and their free tier is certainly enough to get you going.

You'll get a bit of noise from misbehaving browser extensions, but catching those early head-smacking errors is absolutely worth it.

Bonus points for tagging the user context (if applicable) so you can follow up personally with news of a fix.